Attention Settings

A practical concept for regulating attention-extracting products

You deserve transparency and control over how you’re being manipulated.

Attention Settings demonstrates practical pathways for regulating attention-extracting products to increase consumer agency and address an epidemic of phone addiction.

The EU’s recently introduced Digital Services Act includes a section about ‘dark patterns’:

“Under new rules, ‘dark patterns’ are prohibited. Providers of online platforms will be required not to design, organise or operate their online interfaces in a way that deceives, manipulates or otherwise materially distorts or impairs the ability of users of their services to make free and informed decisions.”

The problem is that product design is a shady business: it isn’t black and white. Manipulation and attention extraction go far beyond dark patterns. Addictive loops are at the core of the user experience of the most popular social media platforms. The lines are blurry.

Attention Settings give users awareness of how they're being manipulated and tools to selectively opt out of the most engaging features, so they can reclaim their attentional, cognitive, and behavioral sovereignty.

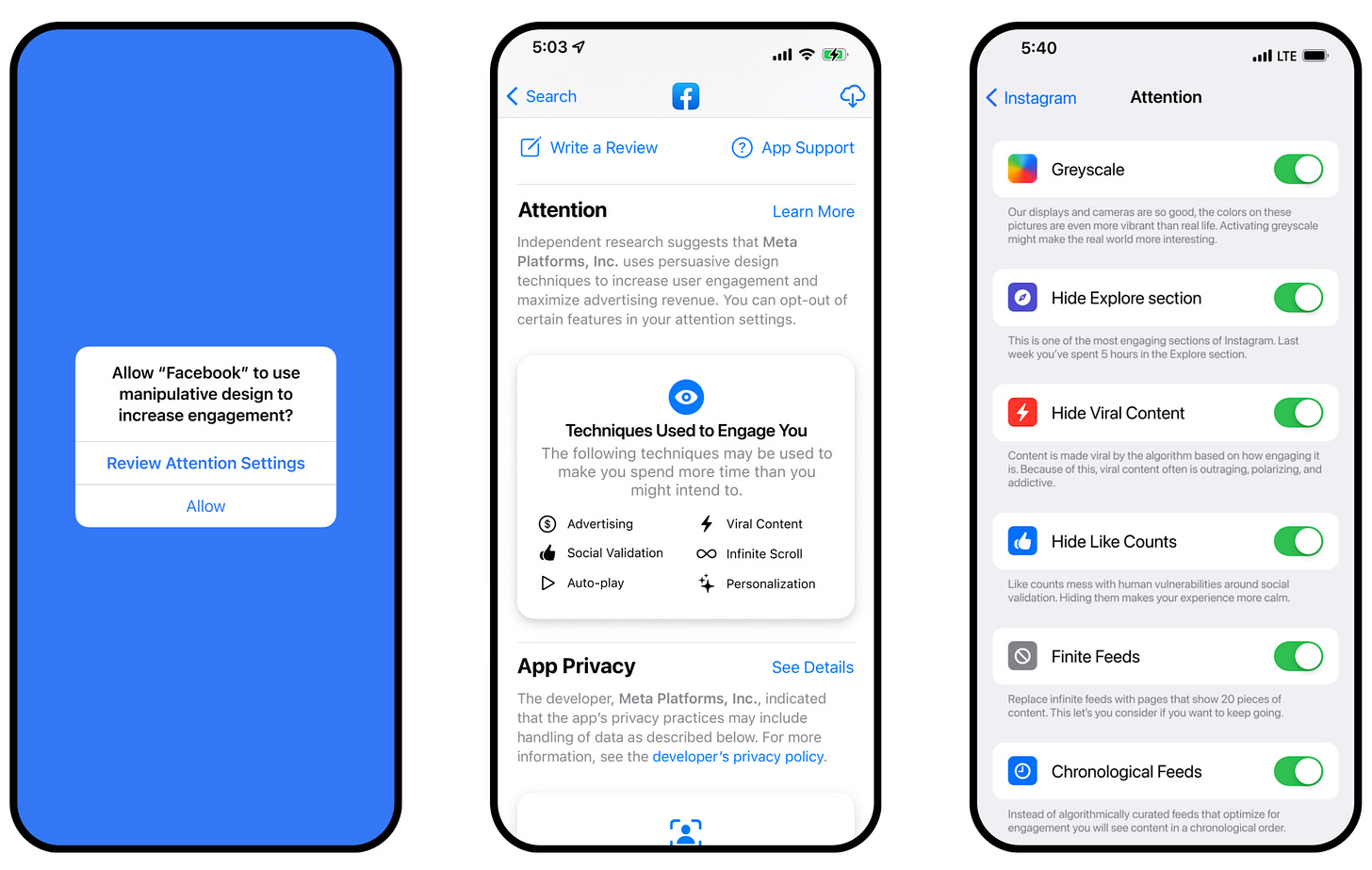

Here’s how it could work…

Products with over 10 million users could be required to provide a) transparency about the techniques they use to increase engagement and b) the option to opt out of these techniques and features, as a condition for passing App Review.

If a product uses persuasive design, every one of these techniques and features should be listed on the App Store nutrition card. The Attention Settings then provide the user with the option to opt out. There could be an educational element to inform users about how these techniques work and why they might want to consider opting out.
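One way to picture this requirement is as a machine-readable disclosure that an app submits for review. The following is a minimal sketch, not an existing App Store format: the names `Technique`, `AttentionDisclosure`, and `passes_review`, as well as the example app and its techniques, are hypothetical. Only the 10 million user threshold comes from the proposal above.

```python
from dataclasses import dataclass, field
from typing import List

# Threshold from the proposal: products with over 10 million users are covered.
USER_THRESHOLD = 10_000_000


@dataclass
class Technique:
    """One engagement-increasing technique (hypothetical schema)."""
    name: str
    description: str   # plain-language explanation shown to the user
    can_opt_out: bool  # whether the app exposes an opt-out toggle


@dataclass
class AttentionDisclosure:
    """What an app might declare on its nutrition card (hypothetical)."""
    app_name: str
    user_count: int
    techniques: List[Technique] = field(default_factory=list)


def passes_review(disclosure: AttentionDisclosure) -> bool:
    """Return True if the app meets the sketched opt-out requirement."""
    if disclosure.user_count <= USER_THRESHOLD:
        return True  # at or below the threshold, the requirement does not apply
    # Every disclosed technique must come with an opt-out.
    return all(t.can_opt_out for t in disclosure.techniques)


# Illustrative data only; not a description of any real app.
example = AttentionDisclosure(
    app_name="ExampleVideoApp",
    user_count=25_000_000,
    techniques=[
        Technique("autoplay", "Plays the next video automatically.", True),
        Technique("infinite_feed", "The feed never ends.", False),
    ],
)
print(passes_review(example))  # False: infinite_feed has no opt-out
```

In this sketch the check only verifies that an opt-out exists for each declared technique. Deciding which techniques must be declared in the first place would still fall to whoever defines the list.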

Here are some examples of what these changes might look like in different apps:

Facebook

Imagine you want to use Facebook for the purpose you signed up for: connecting with family and friends. The ability to choose a chronological feed, hide all short-form videos like Reels, and disable suggested content would make all the difference. The one on the right would be on your terms, showing you the content you want to see, without seducing you into binge-watching viral videos.

YouTube

When I open YouTube, I mostly have an intention for what I want to do. Like, maybe I want to play a song, show a specific video to a friend, or learn how to make a perfect poached egg.

But then YouTube shoves an auto-playing ad in my face and shows me an infinite feed of videos and shorts that are probably really fun to watch, but that will also cost me 2 hours that I didn’t mean to spend. With 70% of watch-time coming from videos suggested by their algorithm, it’s never about that one video you consciously signed up for.

With Attention Settings, you could turn off suggested videos, prevent auto-play, and remove the Shorts tab.

Instagram

Just like with Facebook, time on Instagram is much more worthwhile when you get to see what your friends have been up to than when you’re watching a bunch of ‘entertaining’ videos.

With a chronological feed, “Hide Explore Section”, and “Hide Suggested Content”, it might be viable again for me to have Instagram on my phone and occasionally check what the ~100 people I follow have been up to.

TikTok

There’s some genuinely wholesome content on TikTok. At the same time, the algorithm underlying the For You Page is incredibly good at keeping us scrolling and watching. Attention Settings could help users engage with the app on their own terms — seeing the content they want to see, without getting sucked into binge-watching sessions.

These are merely examples of the kinds of things that could be done and would be helpful. A more comprehensive list could be put together by a consortium of independent organizations that track the leading edge of this space.

The basic principle is this: If you use design techniques that increase user engagement, you need to provide the user with the option to opt out.

The power asymmetry between the design teams at large-scale online platforms and an average user is enormous. On one side of the screen there is weapons-grade behavior modification technology; on the other side there are isolated individuals who have no idea what’s going on.

Their business model incentivizes these companies to maximize engagement at all costs. That means they can’t be trusted to honor their duty of care to act in the users’ best interest.

In order to level the playing field and re-establish the symmetry of power, the user needs to understand what’s going on and have control to turn these things off selectively.

These companies have nation-state scale and utilities-grade network effects, and thereby user lock-in. Currently, that means most people are forced to take whatever the platforms offer, regardless of how manipulative it is and how badly it undermines their mental health, because the alternative is a tradeoff they can’t afford: leaving behind social connection and participation.

When you’re forced to stay, you should at least be empowered to stay on your terms, not just when it comes to privacy but especially when it comes to attention and manipulation.

It's an audacious proposal, and I hear your doubts:

This interferes with 3rd party products in unprecedented ways

Legal and political pushback is almost inevitable

On what basis could we possibly do this?

This will overwhelm users

These are valid concerns, yet I will ask you to put them aside for a minute. If we were able to implement this on a watertight legal basis, at a user-friendly level of simplicity, would it be the right thing to do?

I believe so. Let’s talk about a possible basis of legitimacy.

Legal Legitimacy

Disclaimer: I’m not a legal expert.

There are two fundamental questions to answer:

1: What is classified as engagement-maximizing, and by whom?

Who is to say that a particular feature is attention-maximizing? Where is the line between good UX and manipulation? I believe this is a relatively straightforward question to answer. A consortium of independent organizations, informed by foundational research (funded by Apple or legislators), could surely come up with practical definitions.

2: What might be a sufficient basis of legal legitimacy?

The attention industry will push back on this, and there are a couple of directions they could take. On what basis could Apple or the EU possibly set these requirements?

Two frames seem especially promising as a basis of legitimacy:

Accessibility

Instead of framing engagement-maximizing products as "bad for everyone", there is a reasonably straightforward argument that problematic smartphone use and social media addiction are handicapping a minority of the population.

From poor self-control to mental health issues and precarious life circumstances, regardless of what causes someone to be more vulnerable to manipulative design and cheap dopamine, the vicious feedback loops of compulsive behavior and increased addiction can have catastrophic consequences on people's wellbeing and productivity.

Not providing the comprehensive tooling required for neurodiverse and otherwise disadvantaged people to participate in the use of these products safely and effectively means we’re neglecting to support some of our most vulnerable populations.

Undue Influence

Persuasive technologies, especially social media, exert undue influence — a severe and systematic form of manipulation that is legally recognized in other contexts. Platform providers like Apple have a fiduciary responsibility to protect their customers from such undue influence.

Based on the technical legal definitions provided by psychologists, persuasive technologies have crossed the threshold from persuasion to coercion and thus need to be classified as undue influence [1].

I believe a solid legal specification of Undue Influence in the context of persuasive tech is possible. Four qualities stand out:

Privileged Information: With sophisticated psychological profiles, thousands of data points, and years of meta-data on every user, social media companies have a comprehensive set of privileged information.

Statistical Influenceability: If a particular feature or design change influences real-world behaviors with statistical reliability, that's a sound basis for undue influence.

Asymmetry of Power: The platforms’ data and their ability to use it to modify user behavior are orders of magnitude greater than the average user’s awareness, understanding, and ability to protect themselves from that manipulation.

Lack of Informed Consent: Most users have no clue that an algorithm — let alone weapons-grade behavioral modification technology — is playing against them. Facebook's research showed that they can shape emotions and behavior without triggering the users' awareness.

The cultural, ethical, and philosophical case for limbic capitalism's immorality has already been made extensively. What is undue influence, if not ruthless attention capture and behavior modification, motivated by advertising revenue?

It is a worthwhile cause. This is an opportunity to create a cultural inflection point, to take a stand on the right side of history.

It’s also an opportunity to directly improve the user experience of hundreds of millions of people, helping them get less distracted from the lives they want to live.

But why stop with Attention Settings? There are countless opportunities for minimizing unintended social media usage. Here are our best ideas for how to help people be more intentional in their technology usage.

Mindful UX Interventions and Other Suggestions

There is a whole world of possibilities for how our digital environments could protect our attention. We put together a whole library of humane tech products, concepts, and ideas, but here are three we want to highlight:

App Opening Modals

iOS could provide users with the option to enjoy a mindful moment before continuing with distracting apps and provide Siri suggestions with activities that might be more attractive than mindless scrolling.

While you can already have this experience with apps like one sec or Potential, it takes some setup and the UX is less ideal than what Apple could build. Even better, Apple could provide an AttentionKit and empower developers to trigger modals like this on top of other apps, based, for example, on data from the Screen Time API.
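To make the idea concrete, here is a minimal sketch of the decision such a modal could rest on. It is an illustration under stated assumptions, not an existing Apple API: `UsageSnapshot`, `should_show_modal`, the app names, and both thresholds are hypothetical, and the usage numbers stand in for whatever a Screen Time style data source would report.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class UsageSnapshot:
    """Hypothetical per-app usage for the current day."""
    app_name: str
    minutes_today: float
    opens_today: int


def should_show_modal(usage: UsageSnapshot,
                      distracting_apps: Set[str],
                      max_minutes: float = 30.0,
                      max_opens: int = 10) -> bool:
    """Decide whether to pause the user before the app opens.

    The thresholds are illustrative defaults, not recommendations.
    """
    if usage.app_name not in distracting_apps:
        return False  # only apps the user marked as distracting are gated
    # Trigger once either time spent or number of opens reaches its limit.
    return usage.minutes_today >= max_minutes or usage.opens_today >= max_opens


# The user chooses which apps count as distracting (hypothetical names).
distracting = {"FeedApp", "ShortVideoApp"}

print(should_show_modal(UsageSnapshot("FeedApp", 45.0, 3), distracting))    # True
print(should_show_modal(UsageSnapshot("FeedApp", 5.0, 2), distracting))     # False
print(should_show_modal(UsageSnapshot("NotesApp", 120.0, 40), distracting)) # False
```

Keeping the list of distracting apps and the limits in the user's hands fits the spirit of the concept: the modal is something the user opts into, not something imposed on them.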

Give Researchers Access to Screen Time Data

We need to better understand the science of problematic smartphone use and effective interventions. This is a no-brainer, and it’s a shame it hasn’t been done already.

Unbundle Messengers

Very large platforms like Facebook and TikTok represent the primary social environment for a whole generation of teenagers. Their current design is a serious threat to this generation’s mental health, yet opting out of the service entirely comes at great social cost and exclusion.

These very large platforms (over 50 million users) should be required to offer dedicated messenger apps that don’t include any persuasive design techniques. That way, social participation remains possible without having to sacrifice one’s mental health.

Conclusion

This concept doesn’t solve the root problem of misaligned incentives due to the advertising-based business model. But it levels the playing field in a really important way: It gives people control over their experience.

Attention Settings are a practical pathway for policymakers and ecosystem providers to give users the tools they need to make these products work as intended.

Attention Settings have teeth because they allow users to interact with these products on their own terms. They don’t need to accept the constant lure of unhealthy doom-scrolling and binge-watching. They get the opportunity to exercise their attention and agency freely — without having to lock themselves out of the public sphere.

[1] Social Media Enables Undue Influence, The Consilience Project, 2021